This week there is the RIPE 46 meeting, once again in Amsterdam. Note that if you can't attend, you can follow what's happening using the experimental streaming service. The streaming bandwidth is around 225 kbps for the highest quality but you can fall back to lower quality or audio only, I think. It works both with Windows Mediaplayer and Video Lan Client on my Mac.

There are also archives of the streamed sessions. The presentation slides are also generally available.

Permalink - posted 2003-09-01

There are now VoIP phones that cost about 60 - 75 dollars/euros and there is the free Asterisk "Open Source Linux PBX" software.

Unfortunately, the room didn't seem all that receptive. Well, too bad. I guess the routing crowd isn't ready for IPv6 stuff in general and somewhat non-mainstream IPv6 stuff in particular.

After that there was an hour-by-hour account from a Cisco incident response guy (Damir Rajnovic) about the input queue locking up vulnerability that got out in june. It seems they spend a lot of time keeping this under wraps. I'm not sure if I'm very happy about this. One the one hand, it gives them time to fix the bug, on the other hand our systems are vulnerable without us knowing about it. They went public a day or so earlier than they wanted because there were all kinds of rumours floating around. It turned out that keeping it under wraps wasn't entirely a bad idea as there was an exploit within the hour after the details got out. But I'm not a huge fan of them telling only tier-1 ISPs that "people should stay at the office" and then disclosing the information to them first. It seems to me that announcing to everyone that there will be an announcement at some time 12 or 24 hours in the future makes more sense. In the end they couldn't keep the fact that something was going on under wraps anyway. And large numbers of updated images with vague release notes also turned out to be a big clue to people who pay attention to such things. One thing was pretty good: it seems that Cisco itself is now working on reducing the huge numbers of different images and feature sets, because this makes testing hell.

Permalink - posted 2003-09-01

The power outage in parts of Canada and the US a little over a week ago didn't cause too many problems network-wise. (I'm sure the people who were stuck in elevators, had to walk down 50 flights of stairs or walk from Manhattan to Brooklyn or Queens have a different take on the whole thing.) The phone network in general also experienced problems, mostly due to congestion. The cell phone networks were hardly usable.

There was a 2% or so decline in the number of routes in the global routing table. The interesting thing is that not all of the 2500 routes dropped off the net immediately, but some did so over the course of three hours. This would indicate depleting backup power.

See the report by the Renesys Corporation for more details.

Following the outage there was (as always) a long discussion on NANOG where several people expressed surprise about how such a large part of the power grid could be taken out by a single failure. The main reason for this is the complexity in synchronizing the AC frequencies in different parts of the grid. Quebec uses high voltage direct current (HVDC) technology to connect to the surrounding grids, and wasn't affected.

In the mean time the weather in Europe has been exceedingly hot. This gives rise to cooling problems for many power plants as the river water that many of them use gets too warm. In Holland, power plants are allowed to increase the water temperature by 7 degrees Celsius with a maximum outlet temperature of 30 degrees. But the input temperature got as high as 28 degrees in some places. So many plants couldn't work at full capacity (even with temporary 32 degree permits), while electricity demand was higher than usual, also as a result of the heat. (The high loads were also an important contributor to the problems in the US and Canada as there was little reserve capacity in the distribution grids.) The plans for rolling blackouts were already on the table when the weather got cooler over the last week.

Moral of these stories: backup power is a necessity, and batteries alone don't hack it, as the outage lasted for three days in some areas.

Permalink - posted 2003-08-24

The worm situation seems to be getting worse, but fortunately worm makers fail to exploint the full potential of the vulnerabilities they use. For instance, we were all waiting to see what would happen to the Windows Update site when the "MS Blaster" worm was going to attack it on august 16th. But nothing happened. I read that Microsoft took the site offline to avoid problems, having no intention to bring it back online again, but obviously this information was incorrect because they're (back?) online now.

However, Microsoft received help from another worm creator who took it upon him/herself to fix the security hole that Blaster exploits, by first exploiting the same vulnerability, then removing Blaster and finally downloading Microsoft's patch. But in order to help scanning, the new worm (called "Nachi") first pings potential target, leading to huge ICMP floods in some networks, although others didn't see much traffic generated by the new worm. Nachi uses an uncommong ICMP echo request packet size of 92 bytes, which makes it possible to filter the worm without having to block all ping traffic. See Cisco's recommendations. It seems TNT dial-up concentrators have a hard time handling this traffic and reboot periodically. The issue seems to be lack of memory/CPU to cache all the destinations the worm tries to contact, just like what happened with the MS SQL worm earlier this year and others before it.

Then there's the Sobig worm. This one uses email to spread, which has two advantages: the users actually see the worm so they can't ignore the issue and the network impact is negligible as the spread speed is limited by mail server capacity. Unfortunately, this one forges the source with email addresses found on the infected computer, which means "innocent bystanders" receive the blame for sending out the worm. Interestingly enough, I haven't received a single copy of either this worm or its backscatter so far, even though it seems to be rather aggressive, many people report receiving lots (even thousands) of copies.

Then on friday there was a new development as it was discovered that Sobig would contact 20 IP addresses at 1900 UTC, presumably to receive new instructions/malicious code. 19 of the addresses were offline by this time, and the remaining one immediately became heavily congested. So far, nobody has been able to determine what was supposed to happen, but presumably, it didn't.

There was some discussion on the NANOG list about whether it's a good idea to block TCP/UDP ports to help stop or slow down worms. The majority of those who posted their opinion on the subject feel that the network shouldn't interfere with what users are doing, unless the network itself is at risk. This means temporary filters when there is a really aggressive worm on the loose, but not permanently filtering every possible vulnerable service. However, a sizable minority is in favor of this. But apart from philosophical preferences, it makes little sense to do this as the more you filter, the bigger the impact for legitimate users, and it has been well-established that worms manage to bypass filters and firewalls, presumably through VPNs or because people bring in infected laptops and connect them to the internal network.

Something to look forward to: with IPv6, there are so many addresses (even in a single subnet) that simply generating random addresses and see if there is a vulnerable host there isn't a usable approach. On the other hand, I've already seen scans on a virtual WWW server which means that the scanning happened using the DNS name rather than the server's IP address, so it's unlikely that the IPv6 internet will remain completely wormfree, even if things won't be as bad as they're now in IPv4.

Permalink - posted 2003-08-24

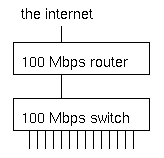

What's wrong with this picture?

Under normal circumstances, not much. But when several machines are infected with an aggressive worm or participating in a denial of service attack when an attacker has compromised them, the switch will receive more traffic from the hosts that are connected to the 100 Mbps ports than it can transmit to the router, which also has a 100 Mbps port.

The result is that a good part of alll traffic is dropped by the switch. This doesn't matter much to the abusive hosts, but the high packet loss makes it very hard or even impossible for the other hosts to communicate over the net. We've seen this happen with the MS SQL worm in january of this year, and very likely the same will happen on august 16th when machines infected with the "Blaster" worm (who comes up with these silly names anyway?) start a distributed denial of service attack on the Windows Update website. Hopefully the impact will be mitigated by the advance warning.

So what can we do? Obviously, within our own networks we should make sure hosts and servers aren't vulnerable, not running and/or exposing vulnerable services and quickly fixing any and all infections. However, for service or hosting providers it isn't as simple, as there will invariably be customers that don't follow best practices. Since it very close to impossible to have a network without any places where traffic is funneled/aggregated, it's essential to have routers or switches that can handle the full load of all the hosts on the internal network sending at full speed and then filter this traffic or apply quality of service measures such as rate limiting or priority queuing.

This is what multilayer switches and layer 3 switches such as the Cisco 6500 series switches with a router module or Foundry, Extreme and Riverstone router/switches can do very well, but these are obviously significantly more expensive than regular switches. It should still be possible to use "dumb" aggregation switches, but only if the uplink capacity is equal or higher than the combined inputs. So a 48 port 10/100 switch that connects to a filter-capable router/switch with gigabit ethernet, could support 5 ports at 100 Mbps and the remaining 43 ports at 10 Mbps. In practice a switch with 24 or 48 100 Mbps ports and a gigabit uplink or 24 or 48 10 Mbps ports with a fast ethernet uplink will probably work ok, as it is unlikely that more than 40% or even 20% of all hosts are going to be infected at the same time, but the not uncommon practice of aggregating 24 100 Mbps ports into a 100 Mbps uplink is way too dangerous these days.

Stay tuned for more worm news soon.

Permalink - posted 2003-08-14